Editor’s note: As retail interfaces and systems continue to evolve, the way we tailor retail experiences to user needs and behaviors is also transforming. In the new series “Stimulating Retail,” join award-winning sensory designer and Harvard neuroscientist Ari Peralta as he breaks down the science and tech behind some of today’s most stimulating sensory retail experiences. From 3D billboards to spatial scent to ChatGPT, meet the brands leading the next wave of multisensory retail innovation, and learn best practices to help strengthen your brand’s sensory presence in this new, multi-dimensional experience market. Read parts one, two and three of the series.

For the past decade or so, big-name retailers have turned to designers and experiential agencies to create multisensory atmospheres in stores. Sensory design is now recognized as a powerful sales tool: the senses play a critical role in human perception and can create positive experiences that delight and engage customers.

Meanwhile, advances in neuroscience have expanded our understanding of how sensory processing actually works. They have helped us reframe our approach to sensory design and elevate our creativity based on the notion of cross-modality, which is the influence one sense can have on another.

This is no longer science fiction.

Today, it is possible to stimulate all of a consumer’s senses online. Sensory Enabling Technologies (SETs) let consumers feel textures, smell and even perceive the taste of a product. These are more than fancy gadgets; they are innovative systems that offer a glimpse of a new, “embodied” online retail environment, one where brands provide digital customers with multisensory spatial experiences like those found in brick-and-mortar stores.

In this final installment of our Stimulating Retail series, we’ll explore ways businesses, whether B2C or B2B, can fill the sensory void online with new technologies capable of carrying the sensations consumers experience when interacting with brands in real life into the digital world. We’ll also investigate how the senses available online can be used to trigger perceptions associated with the absent ones. We’ll take a deep dive into emerging tech such as haptic imagery, conversational artificial intelligence (AI) and augmented reality (AR), and see how innovative brands like Apple, Disney and Journey are applying SETs to create full or partial sensory experiences that increase brand recognition and build customer loyalty online.

Key takeaways:

- SETs enable brands to craft exemplary customer experiences online by appealing to consumers’ multisensory perception.

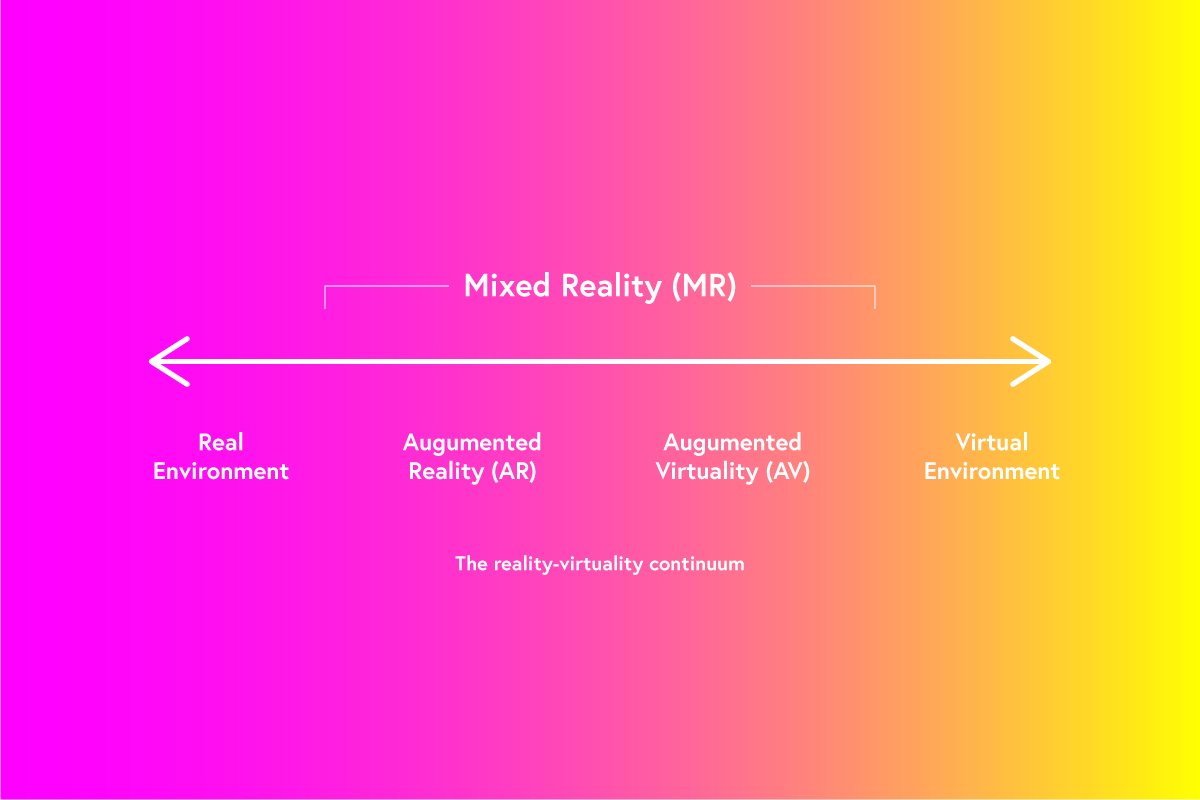

- Multisensory experiences move along the reality-virtuality continuum, so consumers can go from mixed reality (e.g. augmented reality) to fully virtual (e.g. virtual reality).

- Beyond tech, online content has the power to activate sensory memories experienced in real life, simply by conjuring fond memories with tastes, scents and colors.

74% of U.S. retailers could increase revenue by focusing on their ecommerce content.

(McKinsey, 2020)

In recent years, online shopping has grown at a tremendous rate. Online sales reached $4.9 trillion worldwide in 2021 and are predicted to grow by 50% over the following four years. That’s why, in the current retail landscape, the question is no longer whether a company should be online, but how a retailer can best market its offerings online.
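Taking the 2021 figure and the 50% projection at face value, the arithmetic works out as below. The annualized rate is a derived illustration that assumes smooth compound growth over the four years; it is not a number reported in the original forecast.

```latex
% Projected total after four years, from the $4.9T base and +50% growth
\[
\$4.9\ \text{trillion} \times (1 + 0.50) \approx \$7.35\ \text{trillion}
\]
% Implied compound annual growth rate r, assuming growth is spread evenly
\[
(1 + r)^{4} = 1.50 \;\Longrightarrow\; r = 1.50^{1/4} - 1 \approx 0.107 \ (\text{about } 10.7\% \text{ per year})
\]
```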

Traditionally, online retailers have relied mainly on two sensory channels to deliver a customer experience: sight and hearing. This shortage of sensory input makes it more difficult for consumers to inspect products and services comprehensively.

Research published in the Journal of Interactive Marketing found that the visual representation of a product on a neutral background (e.g. a 2D or 3D picture) does not always indicate how this product would look or feel in real life. Furthermore, the online store environment lacks the multisensory atmospheric cues that let consumers become fully immersed in a store atmosphere (Petit et al., 2019).

Multisensory experiences move along the reality-virtuality continuum, where they can go from real, through to mixed reality (e.g. augmented reality) to fully virtual (e.g. virtual reality).

Relying on these technologies can help inform consumers about a product’s sensory properties, such as its texture, smell and even taste. SETs like headphones, touchscreens, AR, VR and a whole host of newer technologies such as digital smell interfaces must be leveraged to make multisensory online experiences more engaging, immersive, informative and enjoyable.

SETs like Apple Vision Pro, Emerge and Aromajoin provide a game-changing opportunity to enrich online retail and engage customers’ multisensory perception.

Recent progress in the field of human-computer interaction means that online environments will likely engage more of the senses and become more connected with offline environments in the coming years. This expansion will likely coincide with an increasing engagement with the consumer’s more emotional senses, namely touch/haptics and possibly even olfaction.

Apple Vision Pro

Apple recently unveiled its much-anticipated Vision Pro, a revolutionary spatial computer that seamlessly blends digital content with the physical world while allowing users to stay present and connected to others.

The tech giant views Vision Pro as a spatial computing platform rather than a single device. Its standout feature is the ability to adjust how immersive one’s virtual environment is. This means brands can build simple 2D overlays, such as collaboration software, or go further and reimagine spatial sound experiences.

The depth sensors for hand tracking are also worth calling out. Apple describes the hand tracking quality as “so precise, it completely frees up your hands from needing clumsy hardware controllers.” Other sensors, such as the LiDAR sensor, work in conjunction with the front cameras to perform “real time 3D mapping” of your environment.

Apple claims Vision Pro has a “detailed understanding” of your floors, walls, surfaces and furniture, which apps can leverage. One example Apple gave was virtual objects casting shadows on real tables, but this only scratches the surface of what should be possible.

Vision Pro creates an infinite canvas for retail experiences that scales beyond the boundaries of a traditional display and introduces a fully three-dimensional user interface controlled by the most natural and intuitive inputs possible: a user’s eyes, hands and voice.

Emerge Haptics x Disney

The rise of generative AI and its integration with various sensory inputs and outputs opens a unique opportunity in the race for technologies that help strengthen connections across distances. Emerge is building a multisensory communication platform spanning sight, touch, sound and brain activity, powered by emotion AI, also known as affective computing.

Emerge’s family of products makes it possible for users to have tactile experiences in a virtual space without gloves, controllers or any other wearables. Its device, which looks like a flat tabletop unit, uses cutting-edge ultrasound technology to enable physical touch in mixed reality environments.

Disney recently announced a partnership with Emerge to bring Emerge’s multisensory communication platform, chiefly its touch feedback, into the home. The partnership will integrate beloved Disney content into Emerge’s personal connection platform. Starting with one of Disney’s top franchises, fans will be able to share multisensory experiences and interact with friends and family across the world from the comfort of home. This would let users physically feel a “virtual high five” or their favorite superpowers, with no gloves or controllers needed.

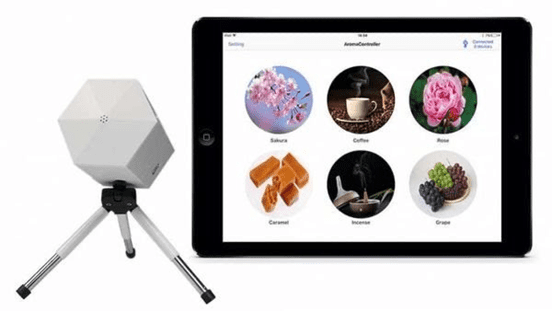

Aromajoin x Journey x Arigami

Japanese tech startup Aromajoin unveiled its scented video platform, AromaPlayer, at the CES 2023 tech trade show. It delivers scents to users while they watch videos.

AromaPlayer is powered by Aromajoin’s proprietary Aroma Shooter technology, a device that can switch between scents instantly and without leaving a residual scent. AromaPlayer itself is a web application accessible via Google Chrome.

The team recently partnered with Journey and Arigami to develop a cross-sensory demo as part of the VIP experience at the Retail Innovation Conference & Expo (RICE). Journey is a global innovation and design studio that pioneers groundbreaking, next-gen customer experiences. Founded in 2022 but drawing on more than 50 collective years of practice, Journey brings together a deep pool of cross-disciplinary talent to harness emerging technologies and create the future of customer experience.

RICE 2023 co-chairs Cathy Hackl and Ari Peralta co-created a multisensory digital gum experience in which the gum’s perceived flavor changes with the digital content being played, with scent delivery coordinated through AromaPlayer.

Beyond tech, online content has the power to bring up sensory memories experienced in real life, simply by conjuring fond memories with tastes, scents and colors.

Practically speaking, we understand that most brands will take their time to deploy SETs, so we wanted to explore additional ways brands can optimize the content they already have right now. This is where the latest advances in neuroscience can help us rethink digital senses altogether.

Based on cross-modal correspondences, mental imagery in one sensory modality (not only vision) might be triggered by a physical stimulus presented in another. In other words, stimuli in the real world lead to multisensory representations that are often stored in the customer’s memory. Although websites generally present products only through pictures and, at most, sounds, brands may be able to use these cues to evoke the lived experiences consumers already associate with a product.

For example, eating a potato chip pairs the sound of its crunch with its taste. Later exposure to product pictures or stimulating videos can engage the brain areas that were stimulated during those earlier experiences which, in turn, may lead to similar sensations.

In neuroscience, we refer to this phenomenon as perceptual re-enactment, which has been observed in different studies, including the following:

- Seeing the picture of a given food or reading its name can activate the olfactory and the gustatory cortices (González et al. 2006; Simmons et al. 2005).

- Visual and auditory information can influence product expectations (i.e., taste and quality expectations; Reinoso-Carvalho et al.) as well as product perceptions (i.e., scent perceptions: Iseki et al.; taste perceptions: Spence et al.) in other senses.

- The sight of lip movements appears to stimulate the auditory cortex too (Calvert et al. 1997).

- Watching the hand of someone else grasping food leads to activations in motor-related brain areas (Basso et al. in press).

- Kitagawa and Igarashi (2005) used sound to induce virtual touch sensations.

- Cross-modal mental imagery has been considered a form of perceptual completion and might thus be used to fill in missing sensory features online (Spence & Deroy 2013).

These studies suggest that through perceptual re-enactments, consumers’ senses might be stimulated online. The perceptual re-enactments produced by images on websites can help fill in the missing features of products that are not physically present. Thus, through the product images they show online, brands might shape sensory expectations and even offset consumers’ need for touch.

Future Outlook: Beware of Sensory Overload

In this article, we’ve explored how a sensory-rich online customer experience can lead to positive consumer reactions. However, triggering multiple senses may also have adverse effects, such as sensory overload, leading to negative customer experiences.

Our brains are busier than ever before. We’re assaulted with noise, bad lighting, jibber-jabber and constant notifications, all posing as information.

Trying to figure out what one needs to know and what one can ignore is exhausting. At the same time, we are all doing more. With computers, smartphones, apps, SETs and AI, we can stay connected almost anywhere we go. This is pretty amazing, but it can also be a little overwhelming.

While the benefits of sensory integration are clear, brands need to do more to use the mindfulness that sensory design can induce to temper the often reckless ways individuals consume. This kind of mindful consumption is especially appreciated by Gen Z consumers and can mean the difference between creating a desire and fulfilling a need.

Consumers will likely continue this multilayered and multimodal engagement, so it makes sense for retailers and consumer packaged goods companies to rethink what an online retail experience can “feel” like and focus on wellness-led outcomes delivered using Sensory Enabling Technologies.

Forbes-recognized neuroscientist and award-winning sensory designer Ari Peralta serves as the official Sensory Design Innovation Chair for the 2023 Retail Innovation Conference & Expo. No stranger to RICE, Peralta champions ways to use the senses to elevate quality of life and promote self-awareness through Arigami — an innovative research consultancy dedicated to multisensory research. The “40 under 40” Harvard alumnus makes a positive impact through interdisciplinary design projects that focus on using the senses and technology to support people’s mental health.