2020 could easily be considered a breakout year for social commerce. Consumers reported spending 45% more time on social media in Q4 2020, and orders coming from social-referred channels (including paid ads and organic posts) grew by 50% year over year, according to data from Salesforce.

As time spent on social apps peaked during the pandemic, overall engagement skyrocketed. Arguably the two leaders in the social commerce movement, TikTok and Instagram, have approximately 689 million and 1.07 billion monthly active users worldwide, respectively. The opportunity to use these channels to share relevant, engaging and entertaining content is clear, but brands and their content creator partners are struggling with social networks culling content that doesn’t fit standard definitions of beauty and health. Further complicating the issue is social networks’ use of AI/machine learning-powered algorithms, which are necessary given the huge volumes of content these networks offer.

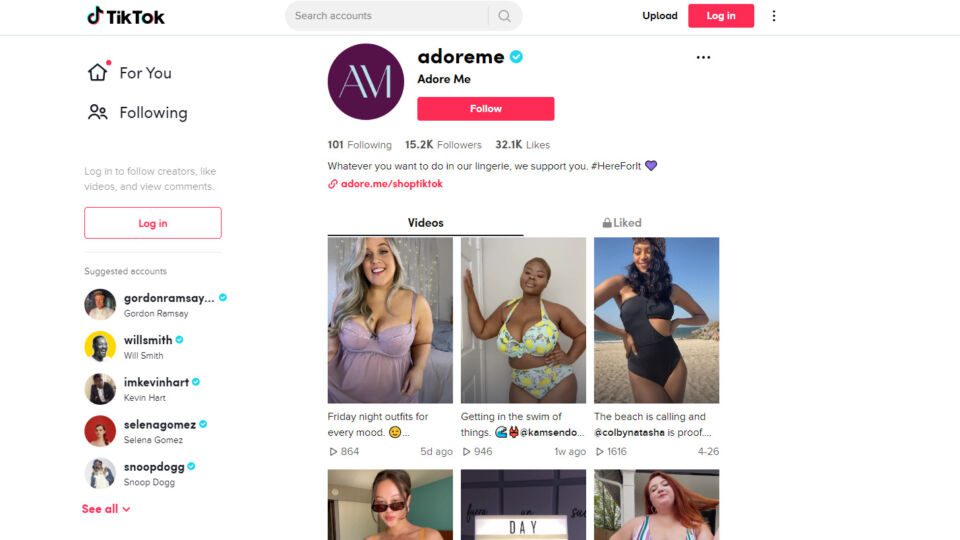

Experts say that implicit biases are built into algorithms and are further strengthened by consumers’ activities on these platforms. In addition, these networks change their algorithms so often that it’s difficult to know what will “make the cut” and what will be dropped. These are key reasons why brands including Adore Me are seeking to drive more transparency around social networks’ content moderation practices. It all started in February 2021, when the direct-to-consumer (DTC) lingerie brand took to Twitter to share a detailed statement on social media moderation practices, specifically those on TikTok. Adore Me shared numerous examples of TikTok content that included plus-sized and handicapped bodies, as well as Black women and creators of color, being removed from the social site.

Adore Me heard directly from several members of its influencer community that their videos had been taken down, and “that pushed us to take a stand,” said Ranjan Roy, VP of Strategy at Adore Me, in an interview with Retail TouchPoints. Roy described it as a “grassroots effort” sparked by the social media manager who runs the TikTok account. “It was then impromptu Slack discussions between our marketers, our engineers, and people like me that kept the conversation moving. Even when it came to writing the thread, our management effectively let the team feel empowered to do so, and made it clear they had everyone’s back in case of any fallout, because this was not without risk.”

When asked to comment for this piece, a TikTok spokesperson disclosed that 89,132,938 videos were removed globally in the second half of 2020 alone for violating Community Guidelines or Terms of Service. This total accounts for less than 1% of all videos uploaded on the TikTok platform. The spokesperson added that when a video is removed for violating Community Guidelines, creators are notified and can appeal the removal. Videos are reinstated if moderators realize upon further review that an error was made.

Fluid Algorithms, Use of AI Create Unique Challenges

Discussions surrounding organic content moderation are hardly new. Instagram has been the center of discussions surrounding shadow banning, which limits an account’s organic content reach, and has frustrated some creators with its ever-changing algorithms. A leaked TikTok moderation document shared by The Intercept showed guidelines that impacted content visibility, touching on everything from physical features to environmental factors like the cleanliness of a room. Later in 2020, the company revealed more details about the algorithm’s inner workings to put users and businesses at ease, and even announced its plans to improve advertising policies for weight loss and diet products.

“These algorithms are always fluid,” noted Matthew Maher, Founder of M7 Innovations, in an interview with Retail TouchPoints. “While moderators have guidelines they need to follow, [these companies] want to use AI/machine learning and algorithms to automate the process.” It’s impossible for humans alone to moderate the sheer amount of content being created on these networks every minute, let alone every day.

However, there are a lot of “dark corners” in these algorithms that reveal subliminal bias, Maher explained. “There could be subtle nuances like cracks in a wall or garbage on the floor, and they’ll assume things about the creator, which [in turn] will impact visibility.”

These social media algorithms are further refined based on user engagement. What we upload, like, share and repost sends cues to the apps, essentially saying: “Show me more content like this!” Kate O’Neil, author of the book Tech Humanist and an expert on the convergence of digital and physical life, explained: “Algorithms need to be trained on some kind of data set, so our participation in these platforms is feeding the beast, in a sense. It’s partly the data we feed into the system — the photos we upload, the games we play and the memes we share under certain hashtags. We label them with contextual information, using filters, etc., and we’re potentially training algorithms to know how old we are or even understand what we really look like under the camera filter.”

A Catch-22 for the Authenticity Era

Social media has been a driving force for the authentic marketing movement. In fact, a staggering 90% of consumers say authenticity impacts which brands they like and support, according to research from Stackla.

Brands like Dove and Aerie have been lauded for their body positivity and for nixing retouching in marketing campaigns, which have used social media as a key element of their tactical mix. A spokesperson from TikTok shared a few key campaigns in which brands successfully touted this authenticity by promoting self-love, mental health and self-care. These initiatives featured JanSport, Burt’s Bees, Marc Jacobs and yes, even Aerie.

However, it is brands’ amplification of user-generated content (UGC) and influencers that is helping build the more authentic brand stories that cultivate loyal communities. Influencers and other social content creators play such a central role in the Adore Me business that it has developed a proprietary platform to support them.

The retailer’s team launched Creators, a dedicated space where Adore Me can manage micro-influencer relationships, share campaign details and collect content from partners in exchange for rewards and perks. “To us, the relationship with each individual influencer is more important than the platform itself, and we’ve already seen success working with individuals across platforms,” Roy explained.

However, Adore Me has faced an ongoing tug-of-war between the benefits of these diverse partnerships and the discouragement that comes when content, which takes time and money to create, is removed. “We’re very adamant about staying true to our core value of inclusivity,” Roy explained. “Adore Me was the first lingerie brand to introduce extended sizing across all our products, and we work to make sure our social feeds and imagery represent this. It’s generally not a problem, and in fact, we’ve seen [that] presenting a more diverse group of models and influencers resonates more strongly across most platforms.”

These circumstances impact the livelihood of content creators, who are sometimes paid based on the amount of engagement their posts and videos get. Their broader social track record is also taken into account, so if content is removed or their account is disabled, it limits their audience reach and can impact whether a brand wants to work with them.

“A lot of the time the conversation around things like algorithmic bias can feel incredibly theoretical, but there are such real, human impacts,” Roy explained. “Our influencers and models would reach out to us and even apologize for their content being taken down, and they would sometimes even uncomfortably ask if that meant they needed to do another project, as if it was somehow their fault.”

There is also a longer-tail impact for brands: if a brand has a goal of challenging mainstream beauty standards and embracing diversity, its feed needs to represent those values. If content is removed, users may only see a limited range of content and representatives, which ultimately impacts brand perception.

“Even if a brand is creating [and sharing] a bunch of content that is diverse and inclusive, but their more diverse content is getting throttled, that’s what’s going to be visible,” said O’Neil. “Consumers are going to see the more mainstream beauty ideals that aren’t diverse and inclusive, and that’s going to reflect poorly on the brand in the long run. It means the values and the beliefs aren’t really there.”

Accountability and Transparency Come to the Forefront

Since that “viral moment,” all areas of the Adore Me business, including the executive team, have focused on ongoing, transparent conversations around the role of social media in our daily lives, as well as the impact of spotlighting diverse bodies in content. For instance, the company hosted a live panel conversation on Instagram to bring its broader community into the conversation. The company also has made it a common practice to share creators’ content on Twitter when it has been removed by other platforms.

“We have been engaging with various civic groups after the thread went viral because we don’t want this to simply be a flash in the pan bit of attention,” Roy said. To that end, Adore Me is doubling down on its typical practice of monitoring algorithm changes and new content creation vehicles to determine where to spend its time and budget.

Experts agree that it isn’t realistic to encourage consumers to stop using social networks, or for brands and retailers to stop creating content or advertising on them. It is possible, however, for more ongoing dialogue to take place around what content is being removed, why, and what adjustments can be made to ensure a better experience for users.

“The decisions made by platforms, no matter how seemingly problematic or arbitrary, have generally been accepted by brands — usually we would just try to adapt our own behavior,” Roy noted. “The larger climate feels like it has shifted, where brands, and the public at large, can demand a bit more accountability and transparency from the platforms themselves.”

Demand for accountability also drives a greater need for transparency on the part of social networks, according to Maher: “Algorithmic transparency is the key to everything. If you show everyone these signals, smart enough developers will be able to identify why and where this bias happens. But we can clean things up if we’re able to see more of what’s inside.”