According to recent estimates, there are over 26 million ecommerce sites globally. Still, despite the convenience and wealth of choice that online shopping offers (and shoppers love), these sites are only responsible for a modest portion of all retail sales. In the U.S., home to over half of online retail sites, the proportion is only about 16%, per the U.S. Census Bureau.

One of the biggest reasons for the discrepancy between online and offline spend can be boiled down to one word: experience. Physical stores have had the benefit of decade upon decade of experimentation and optimization. They are designed to entice us and to catalyze the release of “happy” hormones, like endorphins and dopamine, in our bodies. They also allow us to touch items and try things on to see if they fit, all of which ultimately drives us to buy.

While the ability to search and compare products online can create an excitement akin to playing a sport, it can’t compete when it comes to letting shoppers pick items up, feel the quality and, of the utmost importance in fashion retail, try things on. That brings up another challenge of the ecommerce world: returns. In March 2024, the International Council of Shopping Centers reported that consumers returned 22% of products they bought online from apparel retailers. That’s more than three times the rate for in-store purchases.

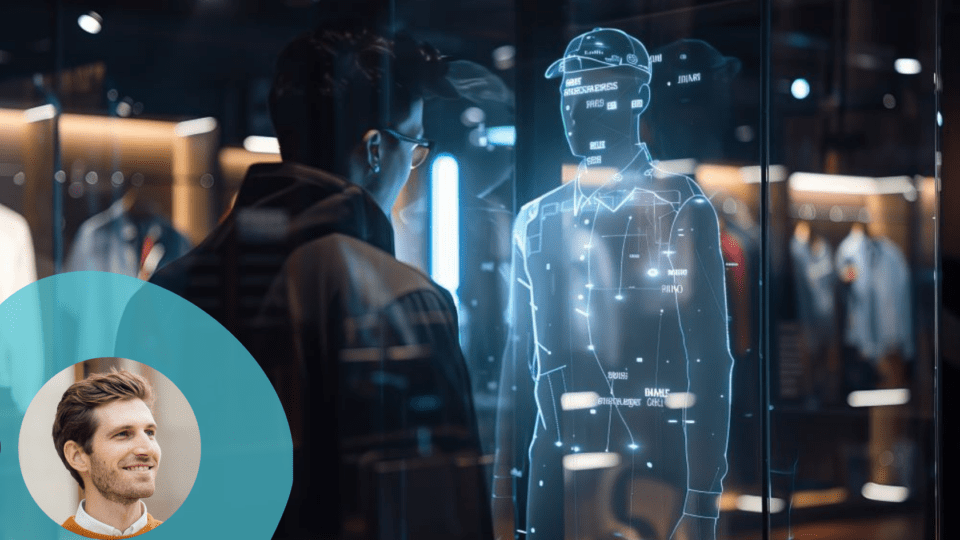

Two of the top reasons for these returns, that the item did not fit (50%) or was not what the buyer expected (42%), can be mitigated with virtual try-on technology. Still, after 20 years of development and experimentation, we haven’t gotten it right. Here are some of the issues that shoppers and merchants have experienced, which have delayed widespread adoption, and the lessons we’ve learned that are helping build a next generation of solutions that is better for everyone.

Implementations Aren’t Consumer-Friendly

For technology that is supposed to improve the customer experience, many attempts at virtual try-ons miss the mark.

Last fall, industry publication Fashionista did a hands-on test of “the best VTO technology offerings [they] could get [their] hands on in fashion retail,” and the results were not great. In one case, the user was asked to upload a full-body photo, but since she was reluctant to submit a nude selfie, her clothes showed through in the results, rendering them all but useless. In another case, she was asked to input a series of measurements, which can be perceived as both time-consuming and intrusive. From the merchant side, collecting that level of personal information opens the door to data governance issues.

Lesson learned: Keep it simple. Most of our online activity revolves around two physical actions: clicking and swiping. Anything we ask of the user that goes beyond those two routine actions can be frustrating, off-putting, confusing and a host of other things that are most definitely not enticing.

The Results Look Fake

Imagine you’re shopping online. You invest the time and effort it takes to complete a virtual try-on, and you love what you see. You buy the item, then anticipate its receipt. When the package arrives, you tear into it and…it doesn’t look anything like the online rendering. Now, you are even more disappointed. Not only do you return the item, but you vow not to shop at that merchant again. This is definitely not what the retailer intended.

A number of issues can cause fake-looking output, but a frequent one stems from using consumer-generated input, which has been a common approach to virtual try-ons. Layering on top of a photo submitted by the shopper may be an OK solution for things like makeup. With soft goods like clothing, however, lighting has a tremendous impact on the look of the garment. Retailers spend small fortunes getting photography just right. Superimposing studio-shot, carefully lit packshots of garments over a low-quality, user-generated selfie taken in completely different conditions is going to yield odd, unrealistic-looking results.

Lesson learned: The best outputs come from great inputs. Until we have technology that can account for the myriad variables that go into a photograph, it’s best to keep the inputs consistent. For now, that means retaining tight control over the visuals used.

Instead of showing the item on a specific person, aim to offer options for people like them. Brands and retailers can shoot models of various sizes, body types and skin colors in the same studios and under the same conditions as the garment shots. This will result in more realistic renderings and happier buyers in the long run.

The Technology Requirements for 3D Renderings Are Too High

The industry is now blessed with some incredible, true-to-life 3D solutions that are changing the game for manufacturers and designers. When it comes to employing these solutions at scale for ecommerce in the fast-moving, ever-changing fashion category, however, it gets really expensive.

It’s also time-consuming, often requiring 10 or more photos of an item, then 20 to 40 minutes to convert those photos to a 3D model. Technology requirements can also be prohibitive on the consumer side, for example by demanding faster internet speeds and more processing power than shoppers have at their disposal.
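To make the scale problem concrete, here is a back-of-the-envelope sketch in Python. The figures of 10 photos and 20 to 40 minutes per item come from the estimates above. The 3,000-item catalog is a purely hypothetical assumption, as is the idea that items are converted one after another with no parallel processing.

```python
def photos_needed(num_items: int, photos_per_item: int = 10) -> int:
    """Minimum number of photos to shoot, at 10 photos per item."""
    return num_items * photos_per_item


def conversion_hours(num_items: int, minutes_per_item: float) -> float:
    """Total hours to convert every item's photos into a 3D model."""
    return num_items * minutes_per_item / 60


# Hypothetical catalog size, chosen only for illustration.
CATALOG_SIZE = 3_000

photos = photos_needed(CATALOG_SIZE)
low = conversion_hours(CATALOG_SIZE, 20)   # best case: 20 minutes per item
high = conversion_hours(CATALOG_SIZE, 40)  # worst case: 40 minutes per item

print(f"{photos:,} photos, {low:,.0f} to {high:,.0f} hours")
# prints "30,000 photos, 1,000 to 2,000 hours"
```

Under these assumptions, the conversion step alone runs to 1,000 to 2,000 hours, and it has to be repeated for every new item added to the catalog.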

Lesson learned: We can walk before we run by using 2D instead of 3D. Some of the most successful virtual try-ons to date are in categories like cosmetics and eyewear. These are done almost exclusively with less cumbersome (and less costly) 2D technologies.

While we may not yet have perfect virtual try-ons, we’ve come a long way in 20 years. And we’ve learned a lot. For brands and retailers, the most important thing is to keep the customers’ needs at the heart of your implementation. Give them an experience that doesn’t ask a lot of them and offers a result that is accurate and dependable enough to base a purchasing decision on.

Someday we’ll have true-to-life, entirely personalized systems, but for today, it’s important to be tactical and realistic about the trade-off between the quality of the rendering and the capacity to scale.

In other words, keep it simple, and it’ll be more compelling.

Maxime Patte is the CEO and co-founder of Veesual, a Paris-based virtual try-on platform changing the way consumers experience digital retail. A serial entrepreneur, Patte has a proven track record of launching and growing innovative tech companies as well as building high-performance teams. He holds prestigious degrees from CentraleSupélec and Sciences Po.