Eye tracking is a common way for retailers to research buying behavior, but until recently eye trackers have been extremely limited in scope. They could only track eye movements on 2D surfaces such as videos and images.

This is leaving retailers in the dark when it comes to truly understanding customers. Recently, eye tracking support in virtual reality (VR) headsets has opened up a new dimension for data collection. VR gives us the ability to go beyond the surface and get deeper insights from 3D environments. As people adjust to COVID-19, shopping habits around the world have changed. According to a study by Coresight, “27.5% of U.S. respondents [are] avoiding public areas at least to some extent, and 58% plan to if the outbreak worsens.”

Now is the time to take another look at how VR can help you deal with these changes by better understanding how people behave under these conditions. This article will highlight the benefits of eye tracking in 3D environments such as VR, and how it’s revolutionizing consumer research.

The Limits Of 2D Eye Tracking

The problem with conventional eye trackers is that they work by overlaying eye movement data on top of 2D surfaces. Collecting data this way has several major drawbacks:

- It lacks depth;

- It’s not scalable; and

- It misses important insights.

Lack Of Depth

Fundamentally, 2D content in the form of videos and images lacks depth. This makes it very difficult to assign context to what’s going on in the footage. How do you tell a product apart from signage or a shopping cart?

Without context, there’s also no way to tell whether the participant looked at the signage or at the person walking by. In contrast, the layers within a 3D environment make it easy to assign context to products in VR. This means it’s simple to tag objects and differentiate stimuli. Once objects are tagged, data is automatically recorded when participants interact with or focus on them.
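To make the idea of tagging more concrete, here is a minimal Python sketch of how gaze might be attributed to tagged objects in a 3D scene. It assumes each tagged object is approximated by a bounding sphere and that the headset reports a gaze ray (an origin and a direction) at a fixed sample interval; the `TaggedObject` class, the object names and the 0.1 s interval are hypothetical, and a real engine would use its own colliders and raycasting.

```python
import math
from dataclasses import dataclass


@dataclass
class TaggedObject:
    name: str                             # hypothetical tag, e.g. "cereal"
    center: tuple[float, float, float]
    radius: float                         # bounding sphere used as a simple collider


def ray_sphere_distance(origin, direction, obj):
    """Distance along a unit-length ray to the sphere, or None if the ray misses."""
    # Solve |origin + t*direction - center|^2 = radius^2 for the smallest t >= 0.
    oc = [o - c for o, c in zip(origin, obj.center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - obj.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    if t < 0:                             # origin is inside the sphere: use the far hit
        t = -b + math.sqrt(disc)
    return t if t >= 0 else None


def gazed_object(origin, direction, objects):
    """Name of the nearest tagged object hit by the gaze ray, or None."""
    norm = math.sqrt(sum(d * d for d in direction))
    direction = tuple(d / norm for d in direction)
    best, best_t = None, math.inf
    for obj in objects:
        t = ray_sphere_distance(origin, direction, obj)
        if t is not None and t < best_t:
            best, best_t = obj, t
    return best.name if best else None


def dwell_times(samples, objects, sample_interval_s):
    """Sum gaze time per tagged object from (origin, direction) samples."""
    totals = {}
    for origin, direction in samples:
        name = gazed_object(origin, direction, objects)
        if name is not None:
            totals[name] = totals.get(name, 0.0) + sample_interval_s
    return totals


if __name__ == "__main__":
    shelf = [TaggedObject("cereal", (0.0, 0.0, 5.0), 0.5),
             TaggedObject("sign", (3.0, 0.0, 5.0), 0.5)]
    eye = (0.0, 0.0, 0.0)
    # Two samples on the cereal, one on the sign, one at the ceiling (hits nothing).
    samples = [(eye, (0.0, 0.0, 1.0))] * 2 + [(eye, (3.0, 0.0, 5.0)), (eye, (0.0, 1.0, 0.0))]
    print(dwell_times(samples, shelf, sample_interval_s=0.1))  # {'cereal': 0.2, 'sign': 0.1}
```

Because every sample resolves to a named object, dwell time per tag falls out of a simple sum, with no one reviewing footage afterwards.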

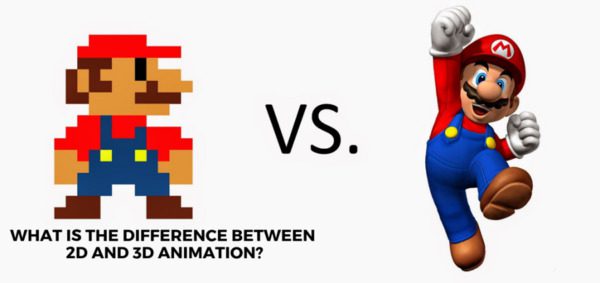

To illustrate this concept, imagine playing Super Mario on the NES and playing Mario on the Nintendo Switch. Don’t get me wrong: the original Super Mario is a classic, but it’s a vastly different experience. The Mario on the left is flat; there’s nothing more to uncover from the image.

Meanwhile, the Mario on the right has thickness and depth to his features. It is also possible to look at him from different perspectives. If you asked participants to describe him from the front and from the back, you’d receive very different descriptions.

It’s time to stop looking at things in 2D and extend your research into 3D. This way, you can capture deeper insights into buying behavior that would’ve been missed before.

Not Scalable

The conventional way to assign context in 2D environments is by marking areas of interest (AOIs) onto the content. This method is labor-intensive and hard to scale. Someone has to:

- Review the video footage from beginning to end; and

- Mark AOIs around relevant objects such as products or signage as they appear in the video.

Afterwards, the footage and eye tracking data have to be reviewed to determine which AOIs the participants noticed. The only alternative to processing AOIs manually is to apply computer vision and AI to recognize objects in the footage, which is expensive and difficult. Otherwise, there aren’t any simple ways to assign context to 2D content at scale.
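For comparison, the hit test at the heart of the manual AOI workflow can be sketched in a few lines of Python. This is a simplified illustration, not any particular eye tracking tool’s format: the hypothetical `AOI` class assumes a person has drawn a fixed rectangle (in pixels) and noted the range of frames during which the object is on screen, and gaze samples arrive as `(frame, x, y)` tuples.

```python
from dataclasses import dataclass


@dataclass
class AOI:
    label: str
    first_frame: int   # inclusive; marked by hand while reviewing the footage
    last_frame: int    # inclusive
    x: float           # top-left corner of the rectangle, in pixels
    y: float
    width: float
    height: float

    def contains(self, frame, gx, gy):
        """True if a gaze point on this frame falls inside the rectangle."""
        return (self.first_frame <= frame <= self.last_frame
                and self.x <= gx <= self.x + self.width
                and self.y <= gy <= self.y + self.height)


def aoi_hits(gaze_samples, aois):
    """Count how many gaze samples landed in each hand-marked AOI."""
    hits = {aoi.label: 0 for aoi in aois}
    for frame, gx, gy in gaze_samples:
        for aoi in aois:
            if aoi.contains(frame, gx, gy):
                hits[aoi.label] += 1
    return hits


if __name__ == "__main__":
    aois = [AOI("cereal_box", 0, 99, 100, 200, 80, 120),
            AOI("shelf_sign", 50, 149, 400, 50, 200, 40)]
    # By frame 120 the cereal box has left the shot, so that gaze point counts for nothing.
    samples = [(10, 130, 250), (60, 450, 70), (120, 130, 250), (120, 500, 60)]
    print(aoi_hits(samples, aois))  # {'cereal_box': 1, 'shelf_sign': 2}
```

The hit test itself is trivial; the cost lies in producing the `AOI` list. Whenever a product moves in the footage, someone has to redraw its rectangle for a new frame range, which is the labor that tagged 3D objects avoid.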

Misses Important Insights

Another shortcoming is that 2D limits the way participants can interact with the content. They are only able to passively interact with products, for example:

- Notice the product; and

- Click on the product.

3D environments allow participants to actively engage with the product in six ways:

- Notice a product;

- Pick up the product;

- Look at the front of the product;

- Turn the product around;

- Look at the ingredients; and

- Purchase the product.

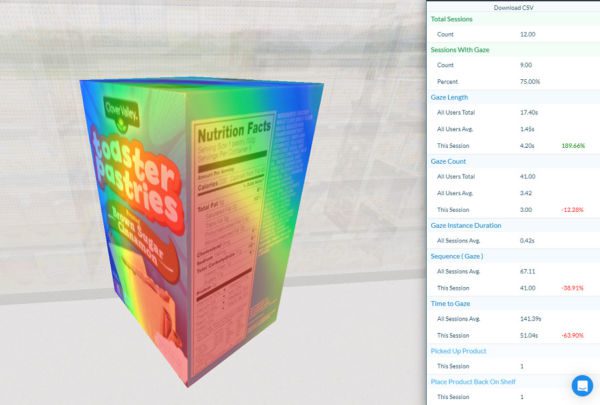

This adds an extra dimension of insight to the study. It’s the difference between seeing something and acting on it. The image above also shows a list of metrics that are captured when participants interact with the product.

We can find out:

- How long the product was looked at;

- In what order it was seen relative to the other objects;

- How many times it was seen across participant sessions;

- How many times it was purchased; and

- How many times it was picked up but NOT purchased.
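Metrics like these can be derived from a log of timestamped interaction events. The Python sketch below assumes a hypothetical event schema (`gaze`, `pickup` and `purchase` actions, with a duration on each gaze event); it is not Cognitive3D’s data model or API, and it simplifies “picked up but not purchased” to a session’s pickups minus its purchases.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    session: str             # one participant session
    time_s: float            # seconds since the session started
    obj: str                 # tag of the object involved
    action: str              # hypothetical schema: "gaze", "pickup" or "purchase"
    duration_s: float = 0.0  # only used for "gaze" events


def product_metrics(events, product):
    """Summarize how participants engaged with one tagged product."""
    dwell_s = 0.0
    times_seen = 0
    first_seen = defaultdict(dict)  # session -> {object tag: time first gazed at}
    pickups = defaultdict(int)      # session -> pickups of the product
    purchases = defaultdict(int)    # session -> purchases of the product

    for e in sorted(events, key=lambda ev: (ev.session, ev.time_s)):
        if e.action == "gaze":
            first_seen[e.session].setdefault(e.obj, e.time_s)
            if e.obj == product:
                dwell_s += e.duration_s
                times_seen += 1
        elif e.obj == product and e.action == "pickup":
            pickups[e.session] += 1
        elif e.obj == product and e.action == "purchase":
            purchases[e.session] += 1

    # Rank of the product among objects in order of first sighting (1 = seen first).
    ranks = []
    for seen in first_seen.values():
        if product in seen:
            order = sorted(seen, key=seen.get)
            ranks.append(order.index(product) + 1)

    # Simplification: per session, pickups minus purchases, never below zero.
    picked_not_bought = sum(max(n - purchases[s], 0) for s, n in pickups.items())

    return {
        "dwell_s": dwell_s,
        "mean_first_seen_rank": sum(ranks) / len(ranks) if ranks else None,
        "times_seen": times_seen,
        "purchases": sum(purchases.values()),
        "picked_up_not_purchased": picked_not_bought,
    }


if __name__ == "__main__":
    log = [
        Event("p1", 0.0, "sign", "gaze", 0.5),
        Event("p1", 1.0, "cereal", "gaze", 1.5),
        Event("p1", 3.0, "cereal", "pickup"),
        Event("p1", 5.0, "cereal", "purchase"),
        Event("p2", 0.0, "cereal", "gaze", 0.75),
        Event("p2", 2.0, "cereal", "pickup"),   # put back on the shelf
        Event("p2", 4.0, "cereal", "gaze", 0.25),
    ]
    print(product_metrics(log, "cereal"))
```

Keeping gaze and actions in the same log is what lets the metrics relate looking to doing, for example a product that was seen often but rarely purchased.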

A study conducted by Accenture, Kellogg’s and Qualcomm found that the use of eye tracking in VR increased total brand sales by 18% during testing. The biggest difference lies in how eye tracking is used in 3D environments versus on 2D surfaces:

- 2D surfaces: Eye tracking is used in isolation. Researchers use heat maps or AOIs to understand whether a product or sign was seen; and

- 3D environments: Eye tracking is used in relation to other actions done by the participant. It provides context to other inputs like biometrics, sequence of actions, duration of actions, etc.

This leads to a significant difference in the insights gathered from a 2D surface and a 3D environment. Another benefit of VR is that it creates a much more realistic shopping experience for participants compared to traditional eye trackers. They can move through the aisles and interact with the products on the shelves. Movement is also natural and not limited to a desk.

Virtual shopping experiences may also provide more accurate insights into real behavior.

Looking Forward

As consumers tighten their belts, retailers know that it’s more important than ever to reflect on their strategies moving forward. But eventually, COVID-19 will pass and we’ll be faced with a different retail landscape. By understanding how consumer behavior changes, retailers can better position themselves to address these challenges.

The use of eye tracking in 3D environments will be an invaluable tool for getting deeper insights into consumer behavior. Will your business be ready for the post-COVID-19 consumer?

Albert Liu is the Product Marketing Manager at Cognitive3D, a VR/AR analytics platform that captures spatial data and turns it into actionable insights. The technology has developed a new language for these types of insights to better quantify user behavior. Cognitive3D is focused on helping enterprises measure success from immersive experiences.